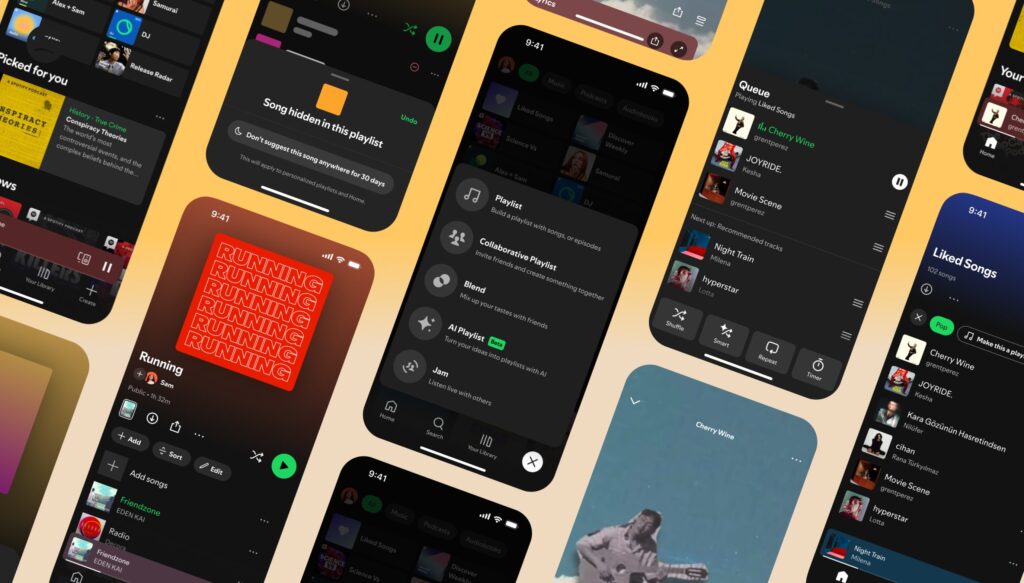

Spotify has started rolling out age verification for UK users, limiting access to certain content unless they confirm their age. The move comes in response to the Online Safety Act, a new law aimed at protecting children from harmful online material.

According to The Independent, the music streaming giant now requires users to verify their age before accessing content marked as “adult.” If the system detects that a user is under 13 — the minimum age to register for Spotify — their account could be removed altogether.

The law doesn’t just target explicit websites; it applies to any platform that might expose minors to potentially harmful content. As a result, even streaming services like Spotify are tightening their content access policies.

To comply with the new requirements, Spotify has partnered with Yoti, a UK-based digital identity company. Yoti’s system estimates a user’s age by analysing their face through the smartphone camera, and the company says it deletes the image immediately after verification.

Spotify says the check is necessary to access specific types of content, such as music videos labeled 18+ by rights holders. If facial verification fails or returns incorrect results, users can manually upload ID documents through their account settings.

Reddit and mod-sharing platform Nexus Mods have already introduced similar age verification systems in response to the same law.

As the UK ramps up efforts to create a safer digital space for young people, expect more platforms to follow suit — one facial scan at a time.